Trying to download the cow genome: where's the beef?

Cows on the UC Davis campus, photo by Keith Bradnam

I want to download the cow genome. A trip to bovinegenome.org seemed like a good place to start. However, the home page of this site is quick to reveal two assemblies. There is Btau_4.6, released by the Baylor College of Medicine Human Genome Sequencing Center (BCM HGSC), and there is also an alternative UMD3.1 assembly from the Center for Bioinformatics and Computational Biology (CBCB) at the University of Maryland.

In many areas of life, choice is a good thing. But in genomics, trying to decide which of two independent genome resources to use, well, that can be a bit of a headache. The bovinegenome.org site tells me that 'Btau_4.6' was released in November 2011. I have to go to the CBCB site to find out that UMD3.1 was made available in December 2009.

Bovinegenome.org helpfully provides a link to the UMD3.1 assembly. Not so helpfully, the link doesn't work. At this point I decide to download the 'Btau_4.6' assembly and associated annotation. The Btau downloads page provides a link to the Assembly 4.0 dataset (in GFF3 format) under a heading of 'Datasets for Bovine Official Gene Set version 2'. So I have to assume that the '4.0' might really refer to the latest 4.6 version of the assembly.

The resulting Assembly 4.0 page has links for each chromosome (available as GFF3 or gzipped GFF3). These links include a 'ChrUn' entry. You will see this in most modern genome assemblies as there is typically a lot of sequence (and resulting annotation) that is 'unplaced' in the genome, i.e. it cannot be mapped to a known chromosome. In the case of cow, ChrUn is the second largest annotation file.

This page also has four more download links for what bovinegenome.org refers to as:

- 'Reference Sequences'

- 'CDS Target'

- 'Metazoa Swissprot Target'

- 'EST Target'

From the Assembly 4.0 downloads page (which may or may not mean version 4.6)

No README file or other explanation is provided for what these files contain. Also, if you try to download the GFF3 links (rather than the gzipped versions), you will find that these links don't work. The 'CDS Target' link lets you download a file named 'incomplete_CDS_target.gff3'. I do not know whether the 'incomplete' part refers to individual CDSs (missing stop codons?) or to the set of CDSs as a whole. Nor do I know what the 'target' part refers to.

The chromosome-specific GFF3 files only contain coordinates of gene features. These features use nine different values in the GFF source field (column 2):

- NCBI_transcript

- annotation

- bovine_complete_cds_gmap_perfect

- ensembl

- fgenesh

- fgeneshpp

- geneid

- metazoa_swissprot

- sgp
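One quick way to see how many features come from each of these sources is to tally column 2 of a chromosome file. The sketch below is a hypothetical helper (`count_sources` is my own name, not part of any bovinegenome.org tooling). It assumes tab-separated, nine-column GFF3 lines and stops at any embedded `##FASTA` section. The example rows are made up.

```python
from collections import Counter
from typing import Iterable


def count_sources(gff_lines: Iterable[str]) -> Counter:
    """Tally GFF3 feature lines by their source field (column 2)."""
    counts: Counter = Counter()
    for raw in gff_lines:
        line = raw.rstrip("\n")
        # Anything after a ##FASTA directive is sequence, not features.
        if line.startswith("##FASTA"):
            break
        # Skip blank lines, ## directives and # comments.
        if not line or line.startswith("#"):
            continue
        columns = line.split("\t")
        if len(columns) != 9:
            continue  # not a well-formed feature line
        counts[columns[1]] += 1
    return counts


# Tiny invented example; real input would be one of the chromosome GFF3 files.
example = [
    "##gff-version 3\n",
    "Chr1\tensembl\tgene\t100\t900\t.\t+\t.\tID=g1\n",
    "Chr1\tfgenesh\tgene\t120\t880\t.\t+\t.\tID=g2\n",
    "Chr1\tensembl\tmRNA\t100\t900\t.\t+\t.\tID=t1;Parent=g1\n",
]
print(count_sources(example).most_common())
```

For the gzipped downloads, you could pass the same function `gzip.open(path, "rt")` in place of the example list, because that yields text lines.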

Again, no README file is provided to make sense of these multiple sources of gene information. In contrast to the chromosome-specific files, the 'reference_sequences.gff3' and 'incomplete_CDS_target.gff3' contain coordinate information and embedded sequence information in FASTA format (this is allowed in the GFF3 spec).
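Because these two files mix features and sequence, it helps to pull the two apart before doing anything else. Here is a minimal sketch. It assumes the files follow the GFF3 specification's convention of a `##FASTA` directive followed by ordinary FASTA records. `split_gff3_with_fasta` is a hypothetical helper, and the example data are invented.

```python
from typing import Dict, Iterable, List, Optional, Tuple


def split_gff3_with_fasta(
    lines: Iterable[str],
) -> Tuple[List[List[str]], Dict[str, str]]:
    """Separate GFF3 feature rows from sequences in a trailing ##FASTA section."""
    features: List[List[str]] = []
    chunks: Dict[str, List[str]] = {}
    current_id: Optional[str] = None
    in_fasta = False
    for raw in lines:
        line = raw.rstrip("\n")
        if not in_fasta:
            if line.startswith("##FASTA"):
                in_fasta = True  # everything after this directive is FASTA
            elif line and not line.startswith("#"):
                features.append(line.split("\t"))
            continue
        if line.startswith(">"):
            # Use the first word of the FASTA header as the sequence ID.
            words = line[1:].split()
            current_id = words[0] if words else ""
            chunks[current_id] = []
        elif line and current_id is not None:
            chunks[current_id].append(line.strip())
    sequences = {seq_id: "".join(parts) for seq_id, parts in chunks.items()}
    return features, sequences


example = """##gff-version 3
ctg1\tannotation\tCDS\t1\t9\t.\t+\t0\tID=cds1
##FASTA
>ctg1 made-up example sequence
ATGAAA
TAA
""".splitlines()
features, sequences = split_gff3_with_fasta(example)
print(len(features), sequences)
```

Keeping features and sequences in separate structures makes it easy to check, for example, that every seqid used in the features actually has a sequence.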

If you look at the coordinate information in the reference sequence file, you see multiple chromosome entries per biological chromosome. E.g.

From the reference_sequences.gff3 file

I do not know what to make of these.

If you want to get the sequences of all the genes, you have to go to yet another download page at bovinegenome.org where you can download FASTA files for CDS and proteins of either the Full Bovine Official Gene Set v2 or the Subset of Bovine Official Gene Set v2 derived from manual annotations. What exactly is the process that generated the 'manual annotations'? I do not know. And bovinegenome.org does not say.

So maybe the issue here is that I should get the information straight from the horse's (or should that be cow's?) mouth. So I head over to the Bovine Genome Project page at BCM HGSC. This reveals that the latest version of the genome assembly is 'Btau_4.6.1' from July 2012! This would seem to supersede the 2011 'Btau_4.6' assembly (which is not even listed on the BCM HGSC page), but I guess I'll have to download it to be sure. The link on that BCM HGSC page takes me to an FTP folder which is confusingly named 'Btau20101111' (they started the 2012 assembly in 2010?).

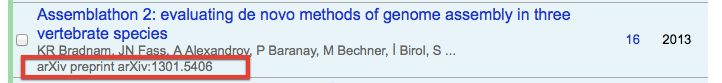

So it seems obvious that all I need to do now is to: check whether the bovinegenome.org 'Assembly 4.0' dataset really lists files from the 'Btau_4.6' release; find out whether those files differ from the BCM HGSC 'Btau_4.6.1' release; understand the differences between the nine different sets of gene annotation; decipher what the 'CDS Target' (a.k.a. 'incomplete_CDS_target') file contains; understand why there are 14,195 different 'chromosome' features in a genome GFF file that describes 30 chromosomes; and finally compare and contrast all of these sequences and annotations with the alternative UMD3.1 assembly.

Bioinformatics is fun!

Update 1 (2014-03-14, 14:58): The BCM HGSC has an extremely detailed README file that explains the (slight) differences between Btau_4.6 and Btau_4.6.1, as well as many other things about how they put the genome together. I like README files!

Update 2 (2014-03-14, 15:18): Of course, I could always go down the Ensembl route and use their version of the cow genome sequence with their annotations. However, the Ensembl 75 version of the cow genome is built on the older UMD3.1 assembly, while an older Ensembl 63 release, based on the Btau_4.0 assembly, is also available (life is never simple!). One advantage of working with Ensembl is that they are very consistent with their annotation and nomenclature.

Update 3 (2014-03-14, 15:35): I now at least realize why there are 14,195 'chromosome' features in the main GFF file provided by bovinegenome.org: they are treating each placed scaffold as its own 'chromosome' feature. This is particularly confusing because a GFF3 feature type (column 3) of 'chromosome' is usually taken to refer to an entire chromosome. GFF3 feature types should be taken from Sequence Ontology terms (e.g. SO:0000340 = chromosome), so in this case the SO term chromosome_part would be more fitting (and less confusing).
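A quick way to spot this situation is to group every feature whose type is `chromosome` by its seqid. If a seqid has more than one such feature, those features cannot each be a whole chromosome. Below is a sketch that assumes column 1 carries the biological chromosome name. I have not checked how the bovinegenome.org file actually names its seqids. The helper name and the example rows are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def chromosome_features_by_seqid(
    gff_lines: Iterable[str],
) -> Dict[str, List[Tuple[int, int]]]:
    """Group features whose type (column 3) is 'chromosome' by seqid (column 1)."""
    spans: Dict[str, List[Tuple[int, int]]] = defaultdict(list)
    for raw in gff_lines:
        line = raw.rstrip("\n")
        if line.startswith("##FASTA"):
            break  # sequence section: no more features
        if not line or line.startswith("#"):
            continue
        cols = line.split("\t")
        if len(cols) == 9 and cols[2] == "chromosome":
            spans[cols[0]].append((int(cols[3]), int(cols[4])))
    return dict(spans)


# Invented rows: two 'chromosome' features on Chr1 suggest they are really
# pieces (e.g. placed scaffolds) rather than whole chromosomes.
example = [
    "Chr1\tassembly\tchromosome\t1\t5000\t.\t+\t.\tID=scaffold_a\n",
    "Chr1\tassembly\tchromosome\t5101\t9000\t.\t+\t.\tID=scaffold_b\n",
    "Chr2\tassembly\tchromosome\t1\t7000\t.\t+\t.\tID=scaffold_c\n",
]
for seqid, pieces in sorted(chromosome_features_by_seqid(example).items()):
    print(seqid, len(pieces), pieces)
```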

Update 4 (2014-03-17, 11:16): I have received some very useful feedback since writing this blog post:

- I was kindly pointed towards the NCBI Genome Remapping Service by @deannachurch (also see her comment below), which lets you project annotation data from one coordinate system to another. This could be handy if I end up using different cow genomes.

- A colleague of mine pointed me to a great 2009 article by @deannachurch and LaDeanna Hillier that talks about how the simultaneous publication of two cow genomes (from the same underlying data) raises issues about how we deal with publication and curation of such data sets.

- Several people contacted me to give their opinion as to the relative merits of the UMD and the BCM HGSC assemblies.

- Several people also echoed my suggestion of working with the same cow data through Ensembl instead.

- I was contacted by someone from bovinegenome.org who said that they would fix the issues that I raised. This was after I contacted them to tell them I had blogged about the problems that I had encountered.

The last point is perhaps the most important one, and I would like to dwell on it for a moment longer. If you are a scientist and experience problems when trying to get hold of data from a website (or FTP site), don't sit in silence and do nothing!

If nobody tells a webmaster that there is an issue with getting hold of data, then the problem will never be fixed. Sometimes files go missing from FTP sites, or web links break, or simple typos make things unclear. The curators involved may never know unless it is pointed out to them.
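Here is an illustration of what projecting annotation between coordinate systems involves, as with the NCBI Genome Remapping Service mentioned in Update 4. It is a deliberately simplified sketch and not how that service works internally. It assumes the two assemblies are related by a sorted list of ungapped, same-strand aligned blocks. The block coordinates below are invented. The sketch maps a single 1-based position and returns `None` when the position falls outside every block.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

# (source_start, target_start, length), 1-based, sorted by source_start,
# non-overlapping, ungapped and on the same strand (simplifying assumptions).
Block = Tuple[int, int, int]


def project(pos: int, blocks: List[Block]) -> Optional[int]:
    """Map a 1-based source position to the target assembly, or None if unaligned."""
    starts = [src for src, _, _ in blocks]
    i = bisect_right(starts, pos) - 1  # last block starting at or before pos
    if i < 0:
        return None
    src, dst, length = blocks[i]
    if pos < src + length:
        return dst + (pos - src)
    return None  # pos falls in a gap between blocks


blocks = [(1, 101, 500), (601, 1001, 400)]
for pos in (250, 550, 700):
    print(pos, "->", project(pos, blocks))
```

A real remapping also has to cope with reverse-strand alignments, gaps within alignments, and features that straddle block boundaries. That extra complexity is a good reason to lean on a dedicated service.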